What does the term "black box" really mean in machine learning?

tl;dr: black box refers to machine learning models that can’t be easily interpreted. Even if they could be interpreted, the models are so large and complex that the interpretation would be convoluted and unhelpful. There are techniques that give approximate interpretations, but they’re flawed.

Definition of black box

From Wikipedia:

In science, computing, and engineering, a black box is a system which can be viewed in terms of its inputs and outputs (or transfer characteristics), without any knowledge of its internal workings.

The term black box is often applied to deep neural networks. So when people say a deep neural network is a black box, what exactly does that mean?

Quick primer on neural networks

Here is a quick primer on neural networks, from my explainer post:

An artificial neural network is a machine learning model designed to act like a biological brain. The model uses nodes (inspired by neurons) connected by edges (inspired by synapses) that are organized into layers.

The input layer consists of input nodes, which are your input or predictor variables. These input nodes have weights or coefficients.

The output layer contains output nodes, which give you the output of your model (i.e. the prediction or response variable).

Between the input and output layers sit one or more hidden layers. The nodes in the hidden layers learn patterns in your data, but unlike the input nodes, you don’t specify what they represent. The weights connecting the hidden layers are determined by training the model on your data (in other words, by finding a collection of weights that best maps the input data to the output data). The author of the neural network decides how many hidden layers and nodes to include.
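To make the layer structure concrete, here is a minimal sketch of a forward pass through a network with two inputs, one hidden layer of three nodes, and one output. The weights and biases are hypothetical, hand-picked numbers (a real model would learn them during training), and the ReLU activation is an assumption chosen for simplicity.

```python
def relu(z):
    """A common activation function: pass positive values, zero out the rest."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation):
    """One layer: each node applies the activation to (weighted sum of inputs + bias)."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters, chosen by hand purely for illustration.
HIDDEN_W = [[0.5, -1.0], [1.5, 0.25], [-0.75, 0.5]]  # 3 hidden nodes, 2 weights each
HIDDEN_B = [0.0, -1.0, 0.5]
OUTPUT_W = [[1.0, -2.0, 0.5]]  # 1 output node, 3 weights
OUTPUT_B = [0.25]


def predict(inputs):
    """Input layer -> hidden layer (ReLU) -> output layer (no activation)."""
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, lambda z: z)[0]


def count_parameters():
    """Total number of weights and biases across both layers."""
    layers = [(HIDDEN_W, HIDDEN_B), (OUTPUT_W, OUTPUT_B)]
    return sum(sum(len(row) for row in w) + len(b) for w, b in layers)


print(predict([2.0, 1.0]))   # -4.25
print(count_parameters())    # 13
```

Even this toy network has 13 parameters, and every one of them is visible. Seeing the numbers is not the same as knowing what each one means.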

Let’s start with what we do know about neural nets

Black box doesn’t mean we don’t know anything. We do know the following:

We know the mathematical structure of the model [1].

We know the data that was used to train the model.

We know the algorithms used to calculate and update the parameters.

Once the model is trained, we can see the parameters (i.e. weights and biases).

When we run the model on new data, we can observe the input and the output.

So what do we not know?

Interpretability

We don’t know why the model’s parameters work. In other words, we can’t interpret the effects of individual parameters (i.e. the weights/coefficients and the bias).

Unlike in linear regression, we cannot read the effect of an individual weight/coefficient directly off the model.

We can interpret linear regression

In linear regression, each variable’s coefficient directly tells you its effect on the output.

For example:

Car Price = $20,000 + (-1,000 * Age) + (-0.1 * Miles)

We can say that for every year the car ages, its price falls by $1,000. And for every mile it drives, its price falls by 10 cents.
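As a quick check of that reading, the sketch below evaluates the car price equation for a few hypothetical cars (the ages and mileages are made up for illustration). The effect of one extra year or one extra mile comes out the same for every car.

```python
import math


def car_price(age_years, miles):
    """The car price equation from the example, with the same coefficients."""
    return 20_000 + (-1_000 * age_years) + (-0.1 * miles)


# Hypothetical cars, chosen only for illustration: (age in years, miles driven).
cars = [(1, 10_000), (5, 60_000), (10, 120_000)]

# Effect of one extra year, holding miles fixed, for each car.
age_effects = [car_price(age + 1, miles) - car_price(age, miles) for age, miles in cars]

# Effect of one extra mile, holding age fixed, for each car.
mile_effects = [car_price(age, miles + 1) - car_price(age, miles) for age, miles in cars]

for (age, miles), d_age, d_mile in zip(cars, age_effects, mile_effects):
    print(f"age={age:>2}, miles={miles:>7}: +1 year -> {d_age:+.2f}, +1 mile -> {d_mile:+.2f}")
```

Each coefficient has one fixed effect no matter which car you start from. That is what makes the model interpretable.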

Neural networks are non-linear

From my neural network explainer post:

a neural network is a series of linear combination equations that get passed into activation functions, which in turn get passed as inputs into more linear combination equations, which are then passed into another activation function.

These activation functions are like light switches, and whether or not they get turned on depends on what’s going on in different parts of the neural network.

So it’s not straightforward to say “this node has this weight, therefore it will have this fixed effect on the output.”
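The light-switch behavior can be seen in a deliberately tiny sketch: one hidden node with a ReLU activation (an assumed choice) and hypothetical, hand-picked weights. Raising the first input by 1 changes the output by a different amount depending on the value of the second input, even though no weight changes.

```python
def relu(z):
    """Acts like a switch: off (0) for negative inputs, on for positive ones."""
    return max(0.0, z)


# Hypothetical hand-picked parameters for a network with one hidden node.
W1, W2, BIAS = 1.0, 1.0, -3.0  # hidden node weights and bias
V = 2.0                        # weight from hidden node to output


def tiny_net(x1, x2):
    hidden = relu(W1 * x1 + W2 * x2 + BIAS)
    return V * hidden


def effect_of_x1(x1, x2):
    """Change in output when x1 goes up by 1 and x2 is held fixed."""
    return tiny_net(x1 + 1.0, x2) - tiny_net(x1, x2)


switch_off = effect_of_x1(1.0, 1.0)  # hidden node stays off, x1 has no effect
switch_on = effect_of_x1(1.0, 5.0)   # hidden node is on, x1 matters

print(switch_off)  # 0.0
print(switch_on)   # 2.0
```

The weight on `x1` is identical in both cases, yet its effect is 0 in one and 2 in the other. With many nodes and layers, these conditional effects stack on top of each other.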

Neural networks have a large number of parameters, and complex interactions

Let’s look at a complex model like GPT-4.

From Wikipedia:

Rumors claim that GPT-4 has 1.76 trillion parameters, which was first estimated by the speed it was running and by George Hotz

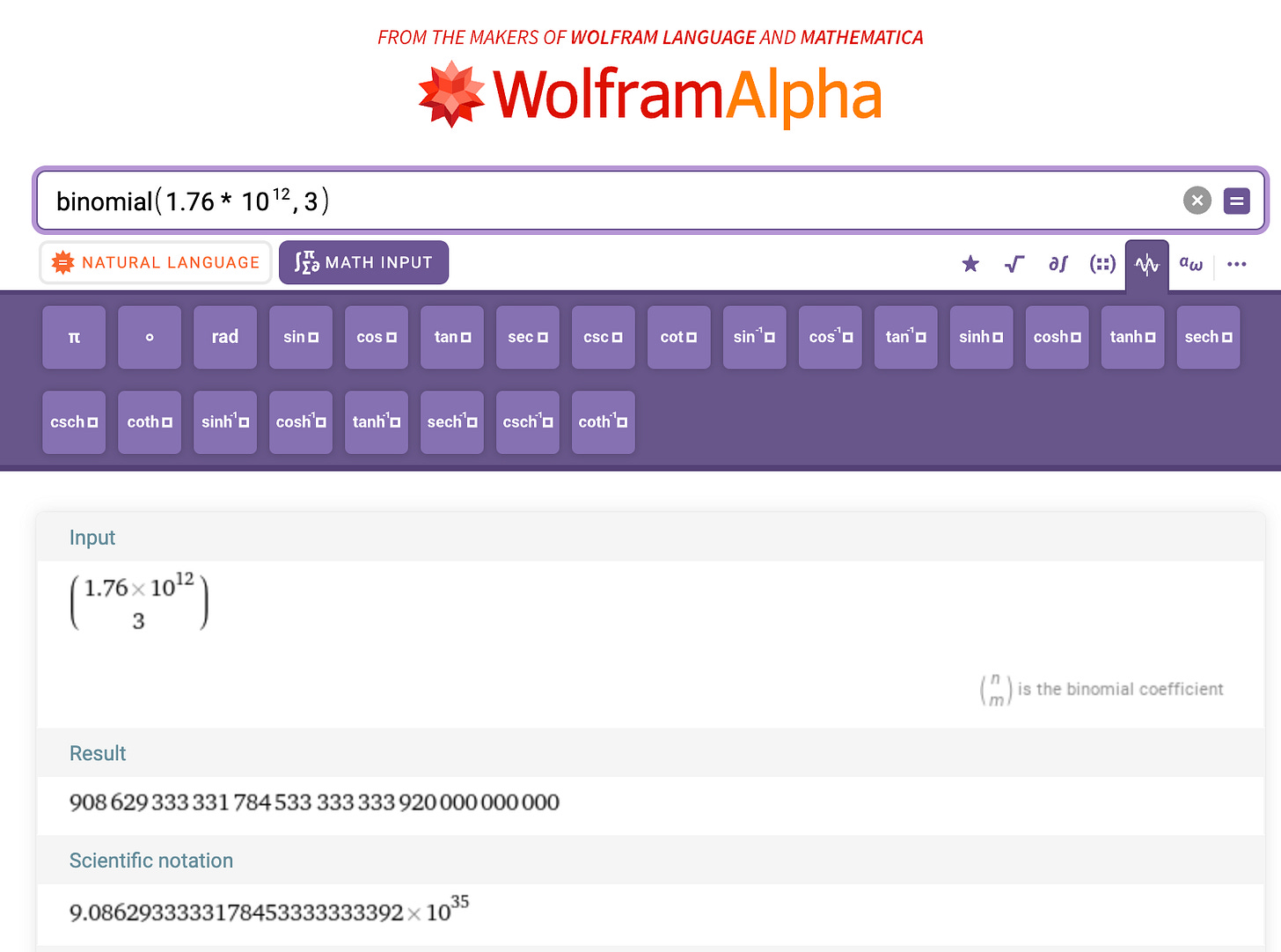

That’s a lot of parameters. If we wanted to count every possible 3-way interaction among these parameters, we could calculate the binomial coefficient (1.76 trillion choose 3).

So in American English, we would say that’s around 909 decillion interactions.
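The figure can be reproduced with Python's built-in `math.comb`. This sketch takes the rumored 1.76 trillion parameter count at face value and uses the American (short scale) definition of a decillion, 10^33.

```python
import math

PARAMETERS = 1_760_000_000_000  # rumored GPT-4 parameter count (1.76 trillion)
DECILLION = 10**33              # American English (short scale)

# Number of distinct ways to choose 3 parameters out of all of them.
three_way = math.comb(PARAMETERS, 3)

print(f"{three_way:.3e}")              # 9.086e+35
print(round(three_way / DECILLION))    # 909
```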

You can imagine that even if there were a hypothetically perfect and exact way to interpret each node’s effect on the output, as well as its corresponding interactions, the interpretation would be so lengthy and convoluted that no human could comprehend it or make it useful.

What tools are available for interpretability?

There are a variety of techniques that attempt to give approximate interpretations of what drives a neural network’s output. One of the main ones comes from game theory and is called SHAP (SHapley Additive exPlanations). SHAP works by calculating Shapley values.

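The Shapley value of an input feature is its average marginal contribution to the model’s output, taken over all possible combinations of the other features.

These values are defined over all possible combinations of input features. Because the number of combinations grows exponentially with the number of features, SHAP typically estimates them rather than enumerating every one. (Note that the number of input features is very different from the total number of parameters across all nodes, from the input layer through the hidden layers.)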

SHAP is limited in 2 main ways:

It only gives an approximation of the interactions in the model, which can lead to an incomplete or misleading interpretation.

The interpretation can still be very complex for humans to understand and make actionable.
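To show what a Shapley value is, here is a brute-force sketch that computes exact values for a toy three-feature function. It does not use the SHAP library or its estimation methods. The `toy_model`, the instance, and the all-zeros baseline are hypothetical, and replacing a "missing" feature with its baseline value is one simplifying assumption among several possible ones.

```python
from itertools import combinations
from math import factorial, isclose


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value; the rest use the baseline.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    results = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                total += weight * (value(members | {i}) - value(members))
        results.append(total)
    return results


def toy_model(x):
    """Hypothetical model: one additive term plus one two-way interaction."""
    return 2 * x[0] + x[1] * x[2]


phi = shapley_values(toy_model, instance=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The contributions sum to the difference between the prediction (8) and the baseline output (0), and the interaction between the second and third features is split evenly between them. The cost is the catch: the number of coalitions doubles with every added feature, which is why practical tools have to approximate.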

Closing thoughts:

Black box doesn’t mean we don’t understand how the model works — it means we don’t understand why the model works. This can lead to unexpected behavior because machine learning models do not possess common sense.

[1] Along with hyperparameters (e.g. number of layers, number of nodes per layer, learning rate, batch size, epochs).