In his 1970 book Future Shock, the late Alvin Toffler describes how technological development isn't progressing linearly but is instead accelerating exponentially. Just as a car's acceleration can make you nauseous, the rate of technological acceleration can leave even the most "tech-savvy" person alive completely overwhelmed (in other words, in a state of future shock).

To survive, to avert what we have termed future shock, the individual must become infinitely more adaptable and capable than ever before. He must search out totally new ways to anchor himself, for all the old roots—religion, nation, community, family, or profession— are now shaking under the hurricane impact of the accelerative thrust. Before he can do so, however, he must understand in greater detail how the effects of acceleration penetrate his personal life, creep into his behavior and alter the quality of existence. He must, in other words, understand transience.

Short-term concerns about AI typically center on the job market and economic disruption. Medium-term concerns revolve around machines amplifying humanity's worst instincts (throughout history, we've been trying to kill each other). And long-term concerns typically center on machines taking over.

I think all of these concerns are valid. While I think making predictions is fruitless, I do want to record my current thinking on these different categories of concern.

Short term - jobs

I’m going to focus this section solely on programming jobs.

If AI can write code, doesn’t that mean it will take the jobs of programmers?

The job of a programmer is not to write code. A programmer’s job involves thinking about how to solve a problem, then putting those thoughts into writing that a machine can understand. I like the following quote1:

Writing is thinking

Programming in many ways is a subset of writing2. It’s an act of translating your thoughts into something interpretable.

Programmers can use current machine learning models to help clarify their thoughts, free up mental resources, and reduce toil.

If a programmer's job is very repetitive and doesn't require active thinking, I think that job function will be replaced by automation. However, the future will also bring new job functions that we can't anticipate today.

Will the net creation of jobs outweigh the reduction in jobs? Who knows. If machines do take on more essential functions, I could see more human effort being allocated to the leisure industry (e.g. media, entertainment, sports, gaming, etc.), as well as aspirational efforts (e.g. scientific discovery, art, ambitious engineering projects).

However, these areas are already being heavily impacted by AI: discovery systems are being used to do science, and generative art to create media.

The marriage of virtual reality with generative art could make for ridiculously immersive and engaging digital experiences that eclipse existing entertainment options in terms of their capacity for escapism.

Medium term - humanity's worst instincts

When a new technology emerges, people need to first play with it to understand how it works.

It takes some time before it can be integrated into mission-critical or safety-critical systems. Geoffrey Moore in Crossing the Chasm describes early tech as toys. Chris Dixon captures this idea below:

Disruptive technologies are dismissed as toys because when they are first launched they “undershoot” user needs. The first telephone could only carry voices a mile or two. The leading telco of the time, Western Union, passed on acquiring the phone because they didn’t see how it could possibly be useful to businesses and railroads – their primary customers

Any great technology has potential for uniting and uplifting people or dividing and destroying people. Steel can build stronger weapons or safer bridges. Nuclear power can provide sustainable energy or unleash unspeakable devastation.

Weapon systems are already being paired with AI today, with autonomous drones as a common example.

The idea of mutually assured destruction has unfortunately been around for a while, and disarmament of WMDs is an ongoing effort. Should AI-enabled weapons (even low-cost, non-nuclear systems) be treated and regulated as WMDs?

Long term - loss of control

With the technology available today, humans are steering the machines.

If machines become more intelligent than humans, humans could lose control over the machines' behavior.

What would a machine's motivations be if it used its intelligence to buck the safety controls we try to impose?

Would the machines have Darwinian instincts like humans do3, doing anything to preserve themselves, including eliminating us (e.g. The Terminator) or using us as batteries (e.g. The Matrix)?

Would the machines have no interest in "taking over" and just do their own thing (e.g. Her)?

Would the machines irreversibly harm humans by accident while trying to protect us (e.g. HAL in 2001: A Space Odyssey)?

Is intelligence a scalar or a vector?

Machines and humans are very different things. If machine intelligence eventually exceeds human intelligence, will it be strictly better than humans or will there still be holdout areas that humans remain better?

The Hitchhiker's Guide to the Galaxy jokes that humans are only the third most intelligent creatures on Earth. There are animals that arguably outperform humans in some cognitive abilities. Cephalopods have a distributed nervous system (over 50% of their neurons are in their arms) that allows them to multitask incredibly well (something humans don't do well).
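One way to make the scalar-versus-vector question concrete is Pareto dominance. If intelligence is a vector of abilities, one agent is only "strictly better" than another if it is at least as good on every ability and better on at least one. The sketch below is purely illustrative: the helper names (`dominates`, `holdouts`, `scalar_score`) and the ability names and scores are hypothetical, made up for this example rather than taken from any real measurement. It shows how a profile can win on a single summed score while still leaving humans a "holdout" area.

```python
from typing import Dict, List

# A capability "profile" maps a named ability to a score.
# NOTE: the ability names and scores below are hypothetical,
# invented purely for illustration; they are not real measurements.
Profile = Dict[str, float]


def dominates(a: Profile, b: Profile) -> bool:
    """Pareto dominance: `a` is at least as good as `b` on every shared
    ability and strictly better on at least one."""
    shared = a.keys() & b.keys()
    at_least_as_good = all(a[k] >= b[k] for k in shared)
    strictly_better = any(a[k] > b[k] for k in shared)
    return at_least_as_good and strictly_better


def holdouts(a: Profile, b: Profile) -> List[str]:
    """Abilities where `b` still beats `a` (the "holdout areas")."""
    return sorted(k for k in a.keys() & b.keys() if b[k] > a[k])


def scalar_score(p: Profile) -> float:
    """Collapse a profile to one number, treating intelligence as a scalar."""
    return sum(p.values())


# Hypothetical profiles, made up for this example.
human = {"arithmetic": 2.0, "language": 8.0, "multitasking": 4.0, "dexterity": 9.0}
machine = {"arithmetic": 10.0, "language": 9.0, "multitasking": 9.0, "dexterity": 5.0}

# As a scalar, the machine looks strictly "smarter" (33.0 vs 23.0)...
print(scalar_score(machine), scalar_score(human))
# ...but as a vector it does not dominate the human profile...
print(dominates(machine, human))
# ...because humans still hold out on one ability.
print(holdouts(machine, human))
```

Summing is just one arbitrary way to collapse a vector into a scalar; any weighting is a choice, which is part of why the scalar framing can hide holdout areas.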

What if comparing humans to machines stops being relevant because machines and humans merge into one composite cyborg (e.g. Blade Runner)? There's a lot of money being thrown at longevity research and AI. Maybe we'll see these fields converge sooner rather than later?

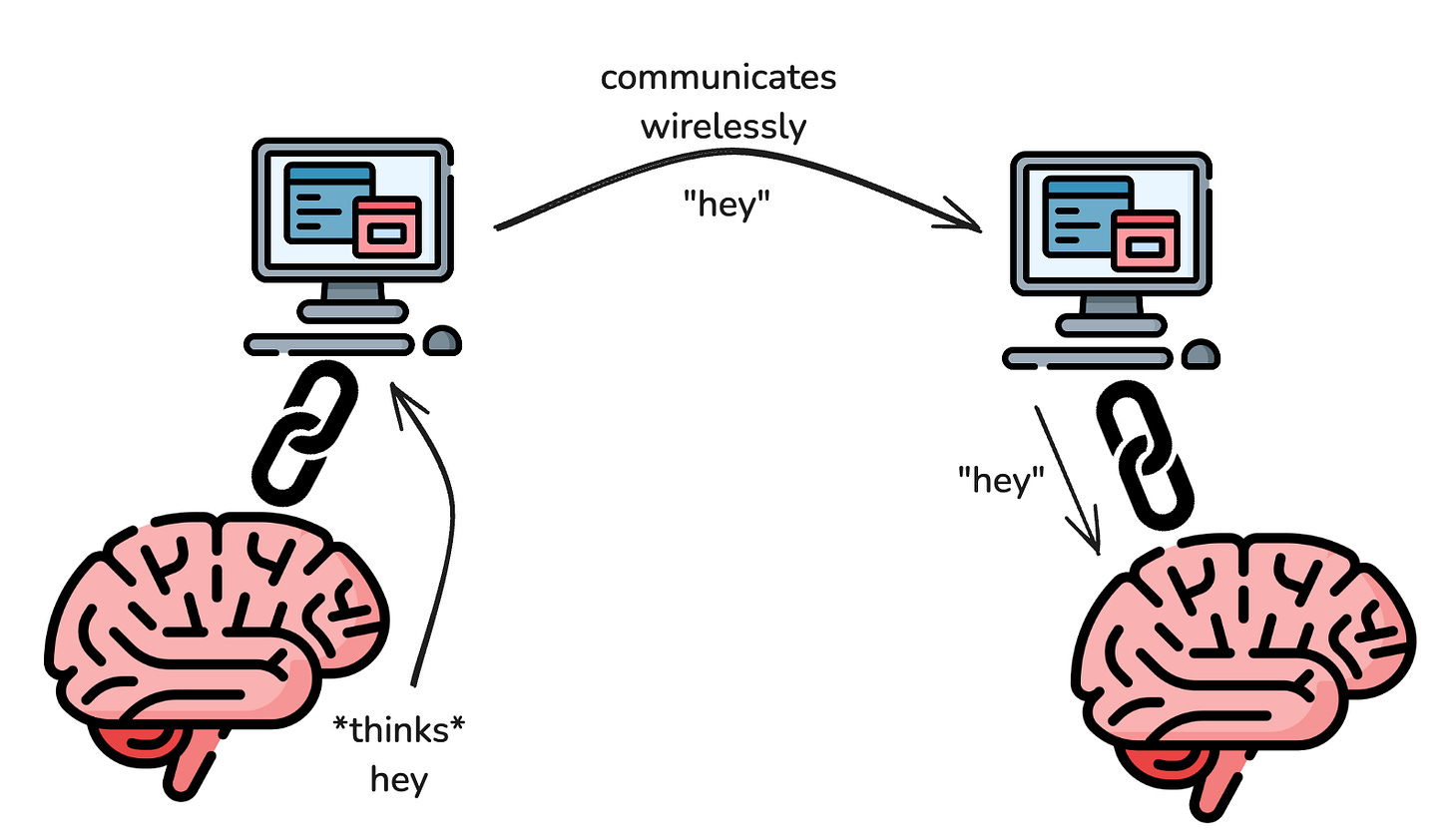

One current technology exploring this avenue is the brain-computer interface (BCI). The first commercial BCI system was BrainGate, which began in the early 2000s. Neuralink has popularized this budding industry.

BCI

BCI has amazing potential to help people with neurological disorders or paralysis. Today, some systems let a person control a computer cursor using brain signals detected by electrodes. BCI is also being explored to help people who have lost limbs control robotic prosthetics.

If BCI continues to advance, it could lead to some freaky things, like telepathy.

If a human can use brainwaves to speak to a computer, that computer can easily communicate with another computer, and the receiving computer can influence a second human's brainwaves, then that's telepathy.

Quick aside on BCI in animals

BCI can also augment the capabilities of less intelligent lifeforms. Researchers are already working on ways to turn bugs into drones:

The “biobots” are also easier to handle than mechanical drones. Users can let nature take its course rather than constantly monitoring and tweaking man-made drones that might hit objects or lose altitude. Beetles control all that naturally. “By sending a signal to the beetle, we are able to simply change its direction of movement and the beetle will manage the rest”

Neuralink has been criticized for using macaque monkeys in its experiments (some of which died during trials).

What if humans started deploying computer-augmented monkeys to perform labor for them? That sounds like a serious animal welfare concern, and an easy way to accelerate the Planet of the Apes plot.

BCI + AI

What I find troubling about bidirectional BCI is this: if machines approach or exceed human intelligence, could the script be flipped, with machines steering humans?

If an intelligent machine has a direct interface to our body’s central command center, what would the implications be? What intentions or incentives might the machine have?

This potential loss of agency, with an outside influence acting on our consciousness, thoughts, and actions, is a troubling thought.

However, these concerns might not be completely novel problems. We might already have outsiders influencing our mood, thoughts, and behaviors. And the idea of agency may be fuzzy at best.

Outsiders and the effect of multiple "brains"

An intelligent computer connected directly to your brain (the central organ of the central nervous system) would be like having two brains. Some scientists call the enteric nervous system (ENS) our second brain. While scientists originally believed the ENS was responsible only for digestion-related tasks, newer findings suggest it influences our mood and behavior by regularly exchanging information with the central nervous system through the vagus nerve and other connections (hence the term gut-brain connection). Scientists also believe the ENS is heavily influenced by the ~40 trillion microorganisms (i.e. gut flora) living inside us.

Are agency and individualism illusions?

Many religious beliefs question whether humans have agency, suggesting our fate is predetermined4. Other religions believe that every individual is actually only a component of a larger interconnected spiritual being5.

The pop-science book Incognito by David Eagleman explores how there isn't a single voice inside our brain. Instead, there is a "chorus" of voices, and the loudest one at a given moment determines our conscious thoughts and behaviors.

Closing thoughts

It's really hard to predict how AI will develop and what its true impact will be. We can look to the past, we can look to nature, we can look to sci-fi. But in the words of Tim Robinson, "anything can happen in this world, we really know very little."

1. Unclear who first coined this phrase
2. Writing code isn't the only way to program (e.g. visual programming and physical computing machines)
3. Thank you, Tyler, for this question
4. Calvinism and the concept of Qadar in Islam are two of many examples
5. Universal consciousness in Hinduism (Brahman) and dependent origination in Buddhism
