Black boxes, common sense, and the cost of mistakes

Mind of their own

Machines today (2024) can beat humans at discrete tasks, but they do not possess general intelligence.

Even without general intelligence, machines sometimes appear to have a mind of their own when they do weird things.

Today’s state-of-the-art AI applications primarily use black-box machine learning models. We understand the math inside the models, and we know that they work really well once trained on lots of data. But we don’t fully understand why they work so well, or why a model produces one particular output rather than another.

Another AI method that has fallen out of favor is rules-based systems. These systems are very easy to explain and interpret because they rely on if-then statements, but they don’t perform well compared to machine learning methods.
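To make the "if-then" idea concrete, here is a minimal sketch of a rules-based decision in Python. Everything in it is hypothetical: the `RULES` table, the `decide` function, the applicant fields, and the thresholds are made up for illustration and do not come from any real system. The point is only that every decision comes with the exact rule that produced it.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical screening rules, checked in order. The thresholds are
# illustrative only, not taken from any real lending policy.
Rule = Tuple[str, Callable[[Dict[str, float]], bool], str]

RULES: List[Rule] = [
    ("income below minimum", lambda a: a["income"] < 20_000, "reject"),
    ("debt ratio too high", lambda a: a["debt"] / a["income"] > 0.5, "reject"),
    ("long credit history", lambda a: a["years_of_credit"] >= 5, "approve"),
]


def decide(applicant: Dict[str, float]) -> Tuple[str, str]:
    """Return (decision, reason). The first rule whose condition holds wins."""
    for name, condition, decision in RULES:
        if condition(applicant):
            return decision, name
    # No rule fired, so hand the case to a person instead of guessing.
    return "manual review", "no rule matched"


if __name__ == "__main__":
    print(decide({"income": 50_000, "debt": 30_000, "years_of_credit": 10}))
```

Because the rules are plain data, anyone can read them and see why an applicant was rejected. The flip side is that someone has to write and maintain every rule by hand, which is part of why these systems struggle to match ML on messy inputs.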

In a rules-based system, it is easier to add guardrails. It is also easier to program in common sense. Machine learning models often lack common sense:

Beyond funny behavior, like the image above, ML-based techniques will also give biased outputs if there is bias in the training data. This can have unexpected and dangerous results in high-stakes applications, from redlining in lending to automated law enforcement.

With generative art, mistakes cost little, but in safety-critical use cases, the same kinds of mistakes have high costs.

The main drawback of rules-based systems is that they don’t perform nearly as well as ML approaches.

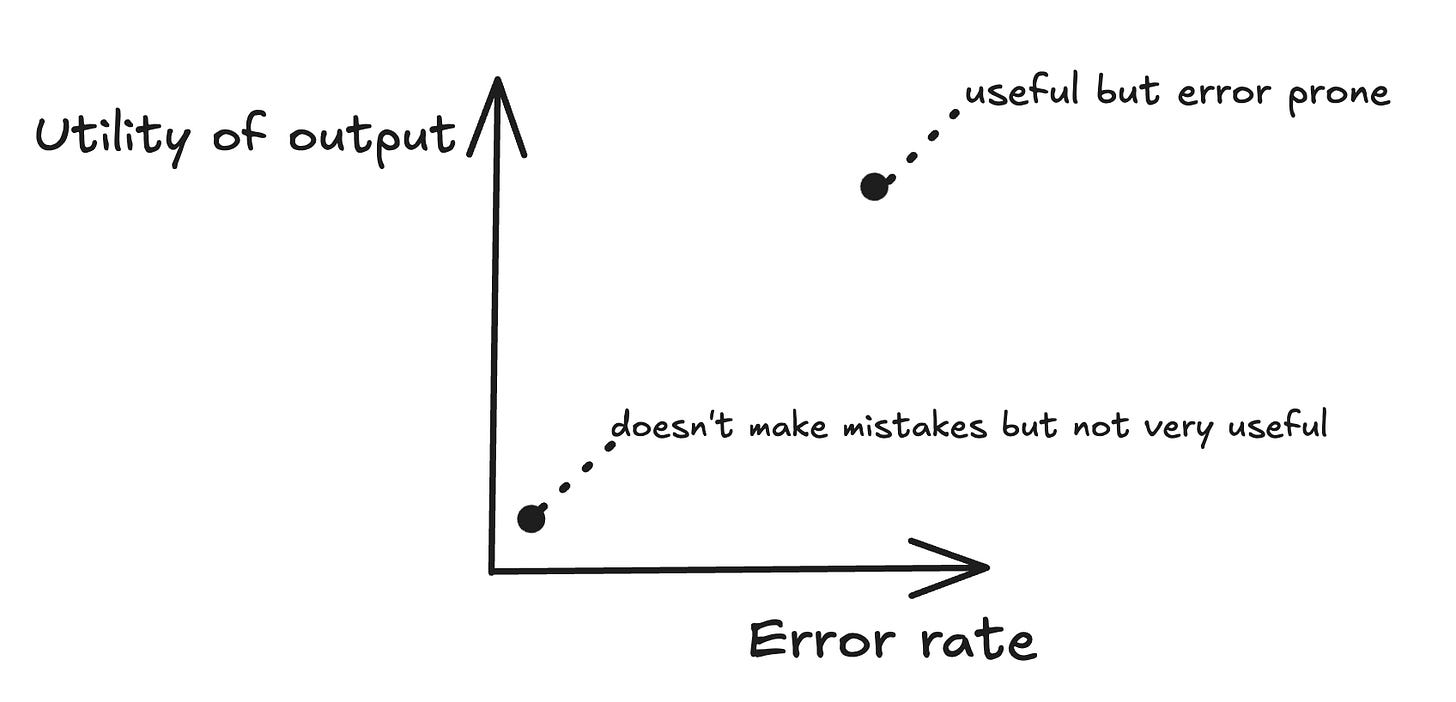

Managing this tradeoff will be an important ongoing problem in AI.

And I expect rules-based systems to make a comeback to help keep these black-box systems on the rails.

Until ML interpretability is solved, the interplay between ML and rules-based systems will be important as AI applications become more ingrained into safety-critical systems.
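One way to picture that interplay is a thin layer of rules wrapped around a black-box model. The sketch below is an assumption about how such a guardrail could look, not a description of any real system: `black_box_speed_model` is a stand-in formula rather than a trained model, and `SPEED_LIMIT` and `guarded_speed` are hypothetical names invented for the example.

```python
import math
from typing import Callable, Tuple

SPEED_LIMIT = 50.0  # hypothetical hard limit enforced by the rules layer


def black_box_speed_model(sensor_reading: float) -> float:
    """Stand-in for a trained ML model (an arbitrary formula for this sketch)."""
    return 40.0 + 25.0 * sensor_reading


def guarded_speed(
    sensor_reading: float,
    model: Callable[[float], float] = black_box_speed_model,
) -> Tuple[float, str]:
    """Ask the model for a speed, then apply if-then guardrails to its output."""
    proposed = model(sensor_reading)
    if math.isnan(proposed):
        return 0.0, "rule: output was not a number, so stop"
    if proposed < 0.0:
        return 0.0, "rule: negative speed clamped to zero"
    if proposed > SPEED_LIMIT:
        return SPEED_LIMIT, "rule: capped at the speed limit"
    return proposed, "model output accepted"


if __name__ == "__main__":
    for reading in (0.0, 1.0, -2.0):
        print(reading, guarded_speed(reading))
```

The model still does the hard part, but the rules decide what is allowed to leave the system, and each override comes with a reason a person can read.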